Watch Robbie go headless

Lately, a few colleagues and I were brainstorming about a new concept for a customer, discovering new capabilities, and tinkering with innovations and how they could change the way the customer interacts with its users. It isn't a new topic at all, but what if we could remove most of the user interface and focus only on the tasks people perform most, via a device that's always at hand? I can't share the actual concept yet, but I can share the technology we used to demonstrate the idea and show you how powerful it could be.

The concept

We came up with a Watch app with a very minimalistic interface. A colored animation indicates mood and triggers interaction. Once you tap it, you can talk to it: ask it to perform a task, ask for help, request a reminder, anything you need. In the background, it will not only fulfill your request, but also sense your emotion, your state of mind. The app will understand what you want to do, but also whether you need special attention (as a customer). This would trigger a personalized response. Spoken, of course.

I know this is quite generic and might not seem like a brilliant business case (although the concept is awesome, I promise). But this is a technical blog after all, and what makes me want to share this experience is how easy it was to build this proof of concept. Not to mention the potential of this use case!

Remember…?

So, we need speech-to-text, intent recognition, emotion detection, profiling and personalization, and text-to-speech. And then it struck me: that's exactly what Robbie can do, but via a different channel! For those who don't remember Robbie or have never seen him, check out this blog post: https://robhabraken.nl/index.php/2817/can-you-be-my-friend-robbie/. Robbie is a real robot whose interaction is fully built on Azure Cognitive Services and Sitecore, running on a Raspberry Pi with Windows 10 mobile. He can identify faces and you can introduce yourself to him. He keeps track of all interactions on an xDB Experience Profile card and communicates only by voice. You can ask him stuff and he will respond in a personalized way, based on your gender, age and emotion. Just to show off what you can do with Sitecore xDB. I built him together with Bas Lijten.

The business case behind Robbie, however, wasn't that obvious. It demonstrated what Sitecore can do in terms of interactions and personalization, even on a new channel that didn't exist yet. But a robot doesn't scale very well, and it doesn't relate to the daily challenges of a marketer. The technology behind it is very useful, though. And that is what I want to share today, using a much more engaging use case.

How does it work?

As I said, this is a prototype, so the app is quite simple and contains some shortcuts. Let's assume we have a process to identify ourselves, because a watch is always owned by a single user. This could be something in settings or configuration, but it's hard-coded for now: I used my identifier from my conversations with Robbie for this demo. Additionally, this was my first watchOS app… I didn't even own a Mac a few weeks ago, let alone code in Xcode. But a few nights of fiddling and some love from StackOverflow got that covered.
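
If you'd rather move that hard-coded identifier into settings later on, a minimal sketch could read it from `UserDefaults` and fall back to a fixed demo value. The key `contactIdentifier` and the placeholder value `demo-contact-0001` are hypothetical assumptions, not the values used in the actual demo.

```swift
import Foundation

// Hypothetical settings key; not taken from the original app.
let contactIdentifierKey = "contactIdentifier"

// Hypothetical placeholder standing in for the demo's hard-coded identifier.
let demoContactIdentifier = "demo-contact-0001"

/// Returns the identifier stored in settings, or the hard-coded demo value
/// when nothing (or only an empty string) has been configured yet.
func resolveContactIdentifier(defaults: UserDefaults = .standard) -> String {
    if let stored = defaults.string(forKey: contactIdentifierKey), !stored.isEmpty {
        return stored
    }
    return demoContactIdentifier
}
```

Keeping the fallback means the prototype keeps working exactly as before until someone actually configures an identifier on the watch.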

So how does it work? I used Siri's speech-to-text and text-to-speech capabilities, just as we used those of Windows when running Robbie on Windows 10 (mobile). This only takes a few lines of code. You can ask for input by showing suggestions in watchOS, but if you leave those empty, you'll be presented with a full speech-to-text interface right away:

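```swift
// An empty suggestions list takes the user straight to dictation
self.presentTextInputController(withSuggestions: [], allowedInputMode: .plain, completion: { (result) -> Void in
    // result is nil (or empty) when the user cancels, so don't index it blindly
    if let answer = result?.first as? String {
        self.analyseUtterance(utterance: answer)
    }
})
```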

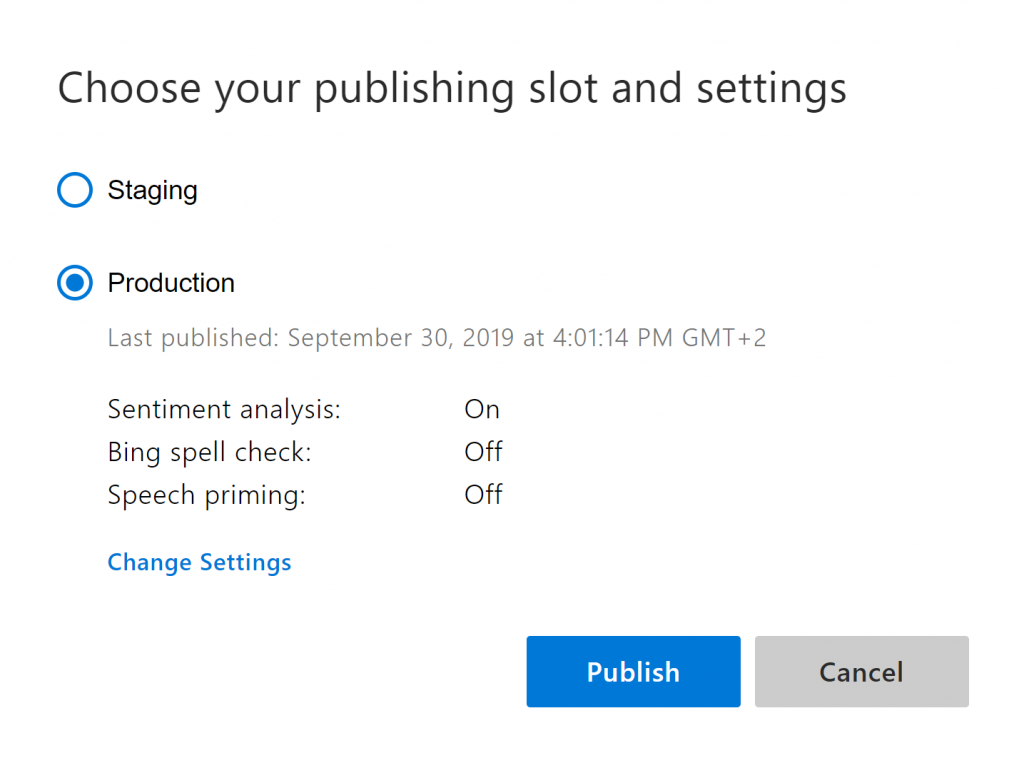

We now have an utterance we can analyse. But since I can't observe the user's emotion visually, I needed another way to detect it. My idea was to use Azure Text Analytics for that: this API can do sentiment analysis based on the wording of your sentence. Perfect for an open speech-to-text interface! And of course, I need to know your intent: what is it that you want to do? We can stick with LUIS for that. But while working on it, I noticed that sentiment analysis is now also available within LUIS. If you click Publish, you can change the publish settings and turn on sentiment analysis.

This means we only need one API call to analyse our utterance (pardon the hacky and basic Swift code and be gentle with me; I know Alamofire could do a better job here):

```swift
func analyseUtterance(utterance: String) {
    let url = URL(string: "https://westus.api.cognitive.microsoft.com/luis/v2.0/apps/00000000-0000-0000-0000-000000000000")!
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.addValue("12345678901234567890123456789012", forHTTPHeaderField: "Ocp-Apim-Subscription-Key")
    request.addValue("application/json", forHTTPHeaderField: "Content-Type")
    request.cachePolicy = .reloadIgnoringLocalCacheData

    // The body is the utterance as a JSON string literal,
    // so escape backslashes and quotes to keep it valid JSON
    let escaped = utterance
        .replacingOccurrences(of: "\\", with: "\\\\")
        .replacingOccurrences(of: "\"", with: "\\\"")
    request.httpBody = ("\"" + escaped + "\"").data(using: .utf8)

    let task = URLSession.shared.dataTask(with: request) { data, response, error in
        guard let data = data, error == nil else {
            print(error?.localizedDescription ?? "No data")
            return
        }
        let jsonResponse = try? JSONSerialization.jsonObject(with: data, options: [])
        if let jsonResponse = jsonResponse as? [String: Any] {
            // parse result...
        }
    }
    task.resume()
}
```

And this is what we get back from LUIS:

```json
{
  "query": "can you tell me a joke?",
  "topScoringIntent": {
    "intent": "Joke",
    "score": 0.742633462
  },
  "entities": [],
  "sentimentAnalysis": {
    "label": "positive",
    "score": 0.795731843
  }
}
```
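
The `// parse result...` placeholder in `analyseUtterance` could be filled in by decoding the response into a small `Decodable` model instead of an untyped dictionary. This is a sketch that only covers the fields in the response above; the type and function names are my own, and making `sentimentAnalysis` optional assumes LUIS leaves it out when sentiment analysis isn't switched on.

```swift
import Foundation

/// Models only the fields of the LUIS v2 response shown above.
/// Keys not declared here (such as "entities") are simply ignored by JSONDecoder.
struct LuisResponse: Decodable {
    struct Intent: Decodable {
        let intent: String
        let score: Double
    }

    struct Sentiment: Decodable {
        let label: String
        let score: Double
    }

    let query: String
    let topScoringIntent: Intent
    // Assumed to be absent when sentiment analysis is off in the publish settings
    let sentimentAnalysis: Sentiment?
}

/// Decodes the raw response body, returning nil if it doesn't match the model.
func parseLuisResponse(_ data: Data) -> LuisResponse? {
    return try? JSONDecoder().decode(LuisResponse.self, from: data)
}
```

Inside the `dataTask` completion handler, `parseLuisResponse(data)` would then replace the `JSONSerialization` call, giving you `topScoringIntent.intent` and `sentimentAnalysis?.label` as typed values.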

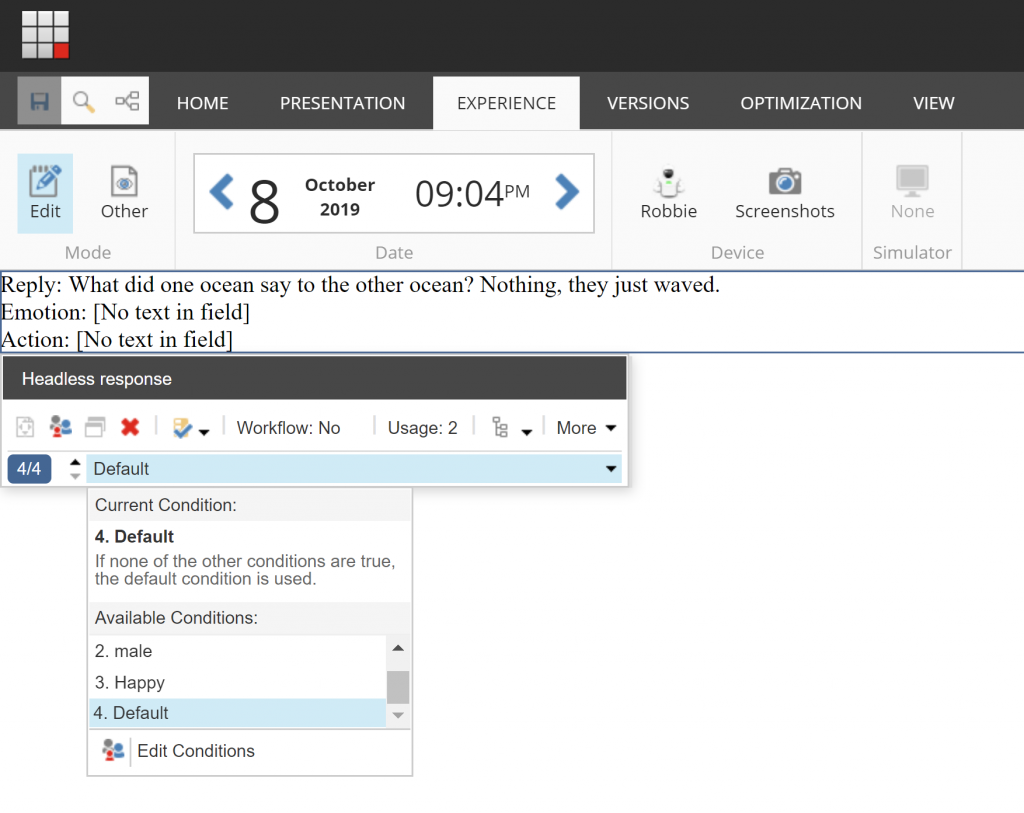

With this information, our demo makes the leap to the Robbie project: that Sitecore implementation already communicates headlessly via JSON and accepts profiling input too. So we can map this sentiment onto an emotion and send it to Sitecore while visiting the page associated with the discovered intent. Here we can personalize our experience in the regular way:

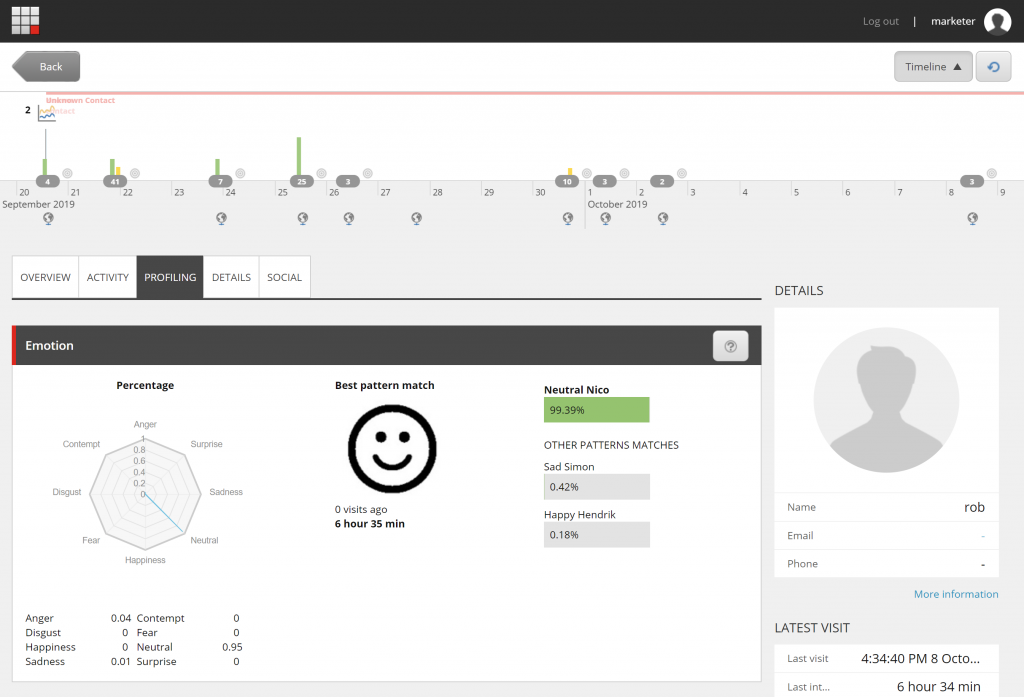
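
On the watch side, mapping the sentiment onto an emotion and building the request for the intent's page could be sketched as below. Everything Sitecore-specific here is hypothetical: the emotion names, one page per lowercased intent name, the base URL and the `contact` and `emotion` query parameters are assumptions for illustration, not the actual contract of the Robbie implementation.

```swift
import Foundation

/// Hypothetical emotion values; the real profile keys aren't part of this post.
enum Emotion: String {
    case happiness, neutral, sadness
}

/// Maps the LUIS sentiment label onto an emotion.
/// Unknown or missing labels fall back to neutral.
func emotion(forSentimentLabel label: String?) -> Emotion {
    switch (label ?? "").lowercased() {
    case "positive": return .happiness
    case "negative": return .sadness
    default: return .neutral
    }
}

/// Builds the URL of a hypothetical headless Sitecore page for an intent,
/// passing the contact identifier and detected emotion as query parameters.
func sitecoreURL(base: URL, intent: String, contactId: String, emotion: Emotion) -> URL? {
    guard var components = URLComponents(url: base.appendingPathComponent(intent.lowercased()),
                                         resolvingAgainstBaseURL: false) else {
        return nil
    }
    components.queryItems = [
        URLQueryItem(name: "contact", value: contactId),
        URLQueryItem(name: "emotion", value: emotion.rawValue)
    ]
    return components.url
}
```

The resulting URL could then be requested with `URLSession`, just like the LUIS call, and the JSON that comes back spoken to the user.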

Finally, all interactions with my smartwatch (!) are tracked in the corresponding Experience Profile, including profiling on my emotion.

How awesome is that? One more case proving there's no limit to what you can do with Azure and Sitecore. Just dream it up. I would love to see other use cases where a watch app has been connected to a Sitecore implementation, although I suspect they'll be rare. But we are certainly going to explore these capabilities further and build some more cool stuff!
